In a live stream on May 13 at 6:00 PM Dubai time, OpenAI unveiled its new flagship model, GPT-4o. The model can talk with users through voice and video with almost no latency. GPT-4o can pick up on tone of voice, crack jokes, respond with near-human intonation, translate in real time, and even belt out a tune.

What’s even more impressive is that GPT-4o comes at a lower cost than its predecessor, GPT-4 Turbo, which pales in comparison to GPT-4o’s capabilities. How is this feat possible? What are the current abilities of this model, and what makes GPT-4o OpenAI’s first true multimodal creation? We’ll explore these questions in depth and also uncover some of the most intriguing Easter eggs from Altman…

Unveiling Capabilities

Leading up to the presentation, OpenAI CEO Sam Altman and some of his engineers were actively stoking the audience’s curiosity, dropping hints on social media about the impending release. Most of these teasers were nods to the film “Her,” where the protagonist develops feelings for an AI system. As it turns out, GPT-4o bears a striking resemblance to Samantha from the movie, with her ability to converse with “lifelike” intonations, display a sense of humor, and respond at human speed. Interacting with GPT-4o can truly make you believe you’re conversing with a living, breathing person.

Eerie? Just a tad.

GPT-4o isn’t just a conversationalist; it’s also a skilled translator. The developers have significantly improved the model’s dialogue quality in languages other than English, and it now supports over 50 languages. The model’s memory has also been bolstered, allowing it to retain previous conversations with the user and build a deeper understanding of you. Once again, a bit unnerving, isn’t it?

In terms of English language and coding capabilities, the release blog post indicates that GPT-4o is on par with GPT-4 Turbo. However, they’re likely being modest here. It was evident a week prior that this model would be a programming powerhouse. But let’s not jump ahead. First, a quick look back.

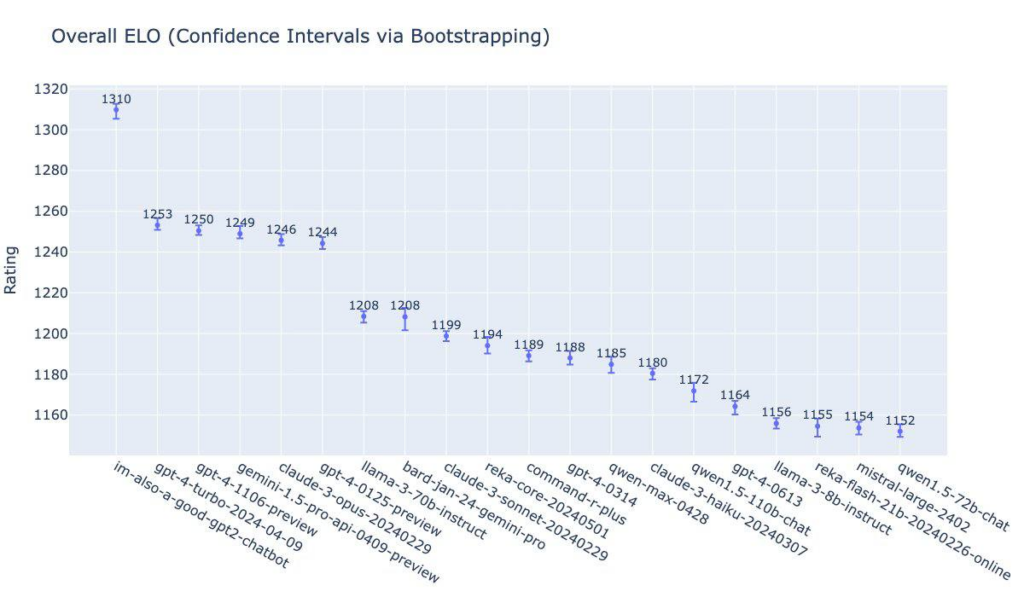

Over the course of several weeks, enigmatic models labeled “gpt2-chatbot,” “im-a-good-gpt2-chatbot,” and “im-also-a-good-gpt2-chatbot” surfaced one by one on the main LLM leaderboard, LMSYS Chatbot Arena. The company behind them wasn’t named, but Sam Altman’s hints on Twitter made it quite apparent.

Users were astounded by the quality of responses from these mysterious models. The last one could generate a complete game with little effort: users reported building working clones of Flappy Bird, a 3D shooter, and an arcade game in mere minutes.

Of course, it’s now clear that this was GPT-4o in disguise. On Chatbot Arena, it currently leads its rivals by 57 Elo points on general language tasks and by 100 Elo points on code. These results are remarkable. Moreover, the model doesn’t rely only on its own knowledge; it also draws on information from the internet when formulating responses.
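To put those Elo gaps in perspective, here is a minimal sketch that converts a rating difference into an expected head-to-head win rate. It assumes the standard Elo formula (base 10, scale 400); the leaderboard's exact rating method may differ, and the function name is purely illustrative.

```python
def expected_win_rate(rating_gap: float) -> float:
    """Expected score of the higher-rated model under the standard Elo formula.

    Assumption: base-10 logistic curve with a 400-point scale.
    """
    return 1.0 / (1.0 + 10.0 ** (-rating_gap / 400.0))


# Rating gaps quoted in the article.
for label, gap in [("general language tasks", 57), ("code", 100)]:
    rate = expected_win_rate(gap)
    print(f"{label}: +{gap} Elo -> about {rate:.0%} expected win rate")
```

Under that assumption, a 57-point lead corresponds to winning roughly 58% of pairwise comparisons, and a 100-point lead to roughly 64%.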

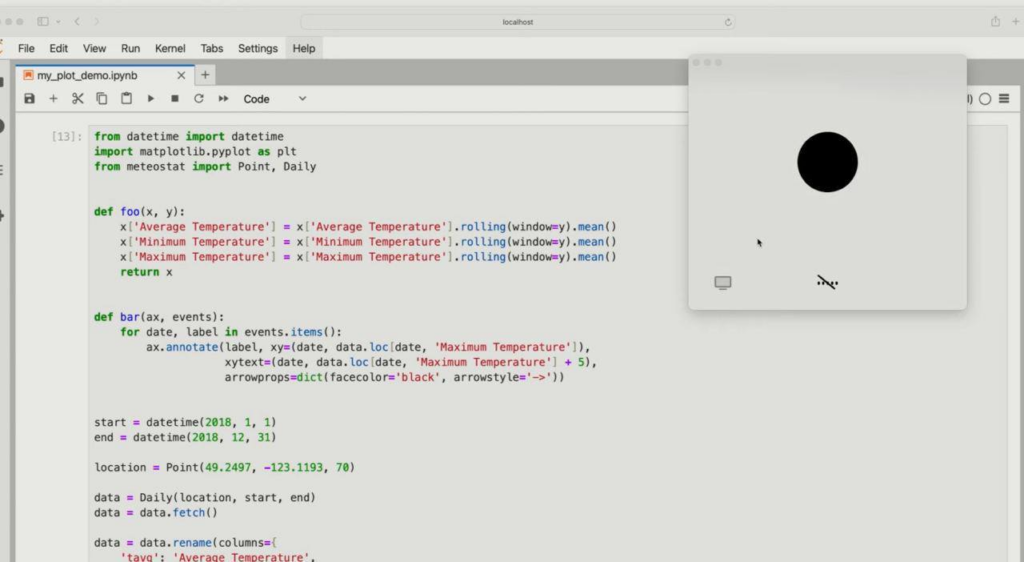

Oh, and here’s another exciting update: the model now offers a streaming mode on macOS. Simply connect the application, highlight code directly on your screen, and the model processes it right away.

But wait, there’s more! The model has also made significant improvements in its handling of images and videos:

- Synthesizing 3D objects

- Sophisticated analysis of charts, diagrams, tables, and handwritten text

- Ability to not only generate images but also remember character appearances, enabling the creation of a complete comic

So we now have a new, remarkably powerful GPT-4o that works with text, voice, images, and video. But is it really the company’s first multimodal model? After all, GPT-4 Turbo could also analyze and generate images and listen to voice. It turns out these are two quite different kinds of multimodality. Let’s break it down.

What Makes GPT-4o the First Authentic Multimodal Model?

The ‘o’ in GPT-4o stands for “omni,” signifying “universal.” Prior to its introduction, users could send images to the bot and utilize voice mode. However, the response delay was around 3 seconds. Now, the model responds at human speed.

The key difference is that previously, “multimodality” was not integrated within a single model but rather dispersed across three separate ones:

- A Speech-to-Text model converted audio into text

- GPT-3.5 or GPT-4 then processed the transcribed text as if it had been typed by the user and generated a response

- A Text-to-Speech model converted the response text back into audio

As a result, the model was unable to comprehend people’s moods and tones or mimic intonations and other sounds.
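The three-stage pipeline described above can be sketched in a few lines to show where the tone gets lost. This is a toy illustration with stand-in functions, not OpenAI's implementation: every name here (`Utterance`, `speech_to_text`, `generate_reply`, `text_to_speech`) is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Utterance:
    """Toy stand-in for an audio clip: the words plus how they were said."""
    words: str
    tone: str  # e.g. "sincere" or "sarcastic"


def speech_to_text(audio: Utterance) -> str:
    # Stage 1: transcription keeps the words and discards the tone.
    return audio.words


def generate_reply(prompt: str) -> str:
    # Stage 2: the language model sees plain text only (stubbed here).
    return f"You said: {prompt}"


def text_to_speech(text: str) -> Utterance:
    # Stage 3: synthesis has no tone information to work from.
    return Utterance(words=text, tone="neutral")


def cascaded_voice_mode(audio: Utterance) -> Utterance:
    return text_to_speech(generate_reply(speech_to_text(audio)))


reply_sincere = cascaded_voice_mode(Utterance("great job", "sincere"))
reply_sarcastic = cascaded_voice_mode(Utterance("great job", "sarcastic"))

# Both inputs produce the same reply because tone never reaches stage 2.
print(reply_sincere == reply_sarcastic)
```

Because the middle stage only ever receives a transcript, a sincere and a sarcastic "great job" are indistinguishable to it.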

Everything has changed now. GPT-4o is trained concurrently on three modalities: text, audio, and images. They all coexist and operate together. This is why GPT-4o can be considered the company’s first genuine multimodal model.

How Can Such a Powerful Model Be So Affordable?

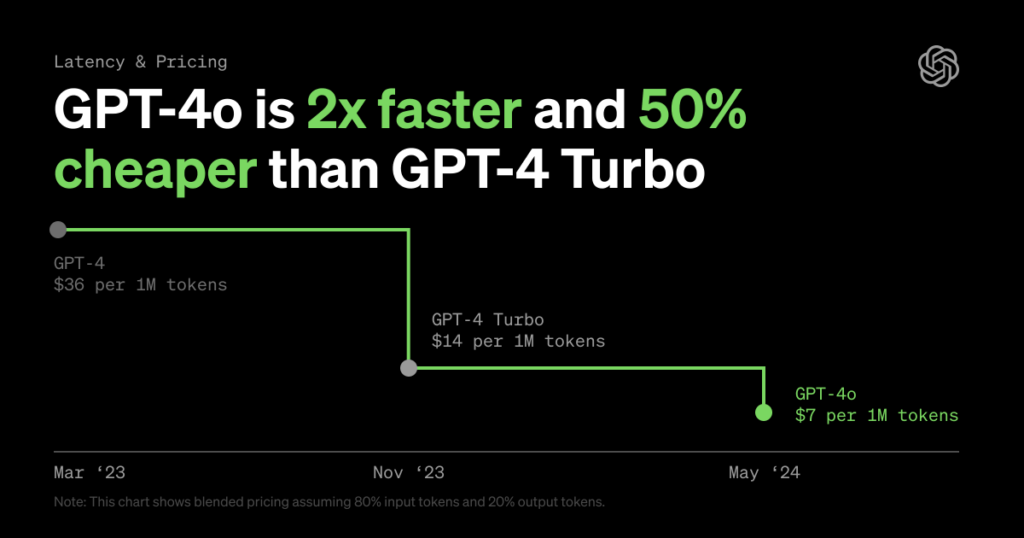

For free users of the bot, the model won’t just be inexpensive; it will be entirely free. Through the API, token processing will cost half as much as with GPT-4 Turbo. So the new model is smarter, faster, and more affordable. How is this possible?

It is possible, and it is not new: prices had already been falling before this release, as the graph shows.

This time, the cost reduction was achieved primarily through the new multilingual tokenizer, which “compresses” the input more effectively. Some languages now need 3-4 times fewer tokens for the same text, which lowers the cost of processing prompts. For instance, processing Russian text will cost 3.5 times less on average.
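A quick back-of-the-envelope sketch shows how token count drives cost. The token counts and the per-million-token price below are made-up illustrative values, not published figures, and `prompt_cost_usd` is a hypothetical helper.

```python
def prompt_cost_usd(token_count: int, usd_per_million_tokens: float) -> float:
    """Cost of a prompt when billing is linear in the number of tokens."""
    return token_count * usd_per_million_tokens / 1_000_000


# Assumed values for illustration only.
OLD_TOKENS = 3_500   # same text under the old tokenizer
NEW_TOKENS = 1_000   # same text under the new tokenizer (3.5x fewer)
PRICE = 10.0         # hypothetical USD per million input tokens

old_cost = prompt_cost_usd(OLD_TOKENS, PRICE)
new_cost = prompt_cost_usd(NEW_TOKENS, PRICE)
print(f"tokenizer alone: {old_cost / new_cost:.1f}x cheaper")

# If the per-token price is also halved, the two savings multiply.
half_price_cost = prompt_cost_usd(NEW_TOKENS, PRICE / 2)
print(f"tokenizer plus halved price: {old_cost / half_price_cost:.1f}x cheaper")
```

The point of the sketch is that the tokenizer saving and any per-token price cut are independent factors, so for languages that tokenize more compactly they compound.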

Is All of This Available for Testing?

Not quite yet. As usual, OpenAI is rolling out the announced updates gradually. The model is already accessible to developers via the API, and text generation with GPT-4o has begun reaching regular users in stages.

For now, the bot’s voice mode still relies on the three separate models. The “Her”-style voice mode demonstrated during the presentation has not been activated yet; it will be rolled out to Plus subscribers over the coming weeks. Video capabilities are currently available only to select user groups.

OpenAI has also announced plans to release a PC application.

Vasilii Zakharov

GPT-4o represents a monumental step forward for AI. While we celebrate this advancement, a measured and responsible approach is vital to ensure that its benefits are shared fairly and ethically. The future of AI is not predetermined. It is shaped by our collective choices and actions.